
AI in agile project management: what's actually working in 2026

Matt Lewandowski

Last updated 09/02/2026 · 7 min read

Sprint planning and estimation

What doesn't work yet

Retrospectives

Standups and daily check-ins

Backlog management

Where AI falls short in agile

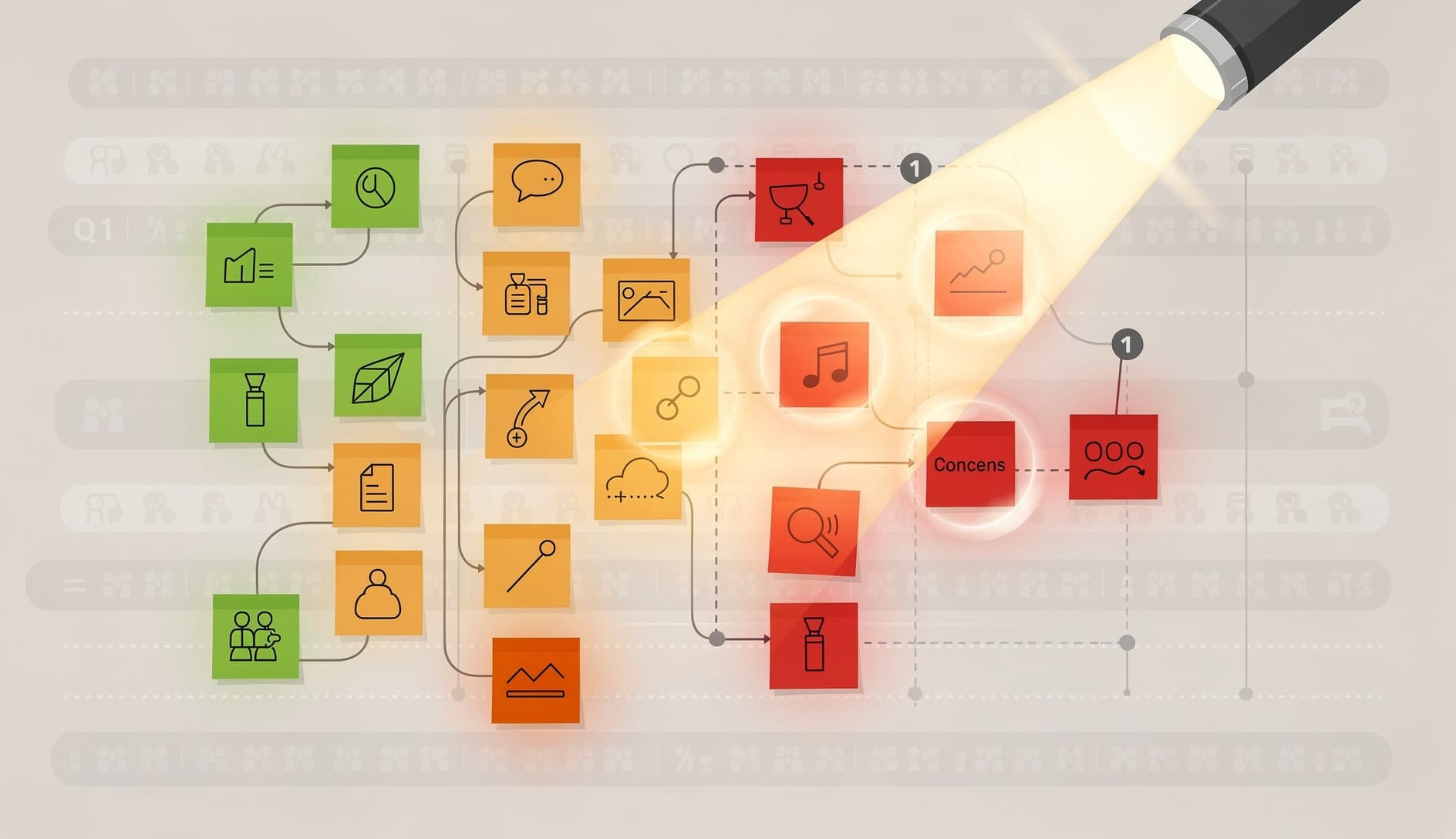

A practical approach to adding AI to your agile workflow

| Agile practice | High-value AI use | Low-value AI use |
|---|---|---|
| Sprint planning | Historical estimation data, pattern analysis | Fully automated story point assignment |
| Retrospectives | Cross-sprint theme tracking, sentiment clustering | Automated action item generation |
| Standups | Multi-day summarization, blocker detection | Replacing human check-ins entirely |
| Backlog grooming | Duplicate detection, stale item flagging | Automated priority ordering |
| Sprint review | Progress summarization, metric visualization | Automated stakeholder communication |