
AI agents in your sprint: how copilots are changing what a story point means

Matt Lewandowski

Last updated 10/02/2026, 7 min read

The problem isn't velocity inflation

What teams are actually experiencing

Story points aren't broken, but they need recalibration

Separate "coding effort" from "delivery effort"

| Phase | AI impact |

|---|---|

| Understanding requirements | Minimal |

| Writing code | High (2-10x faster on routine work) |

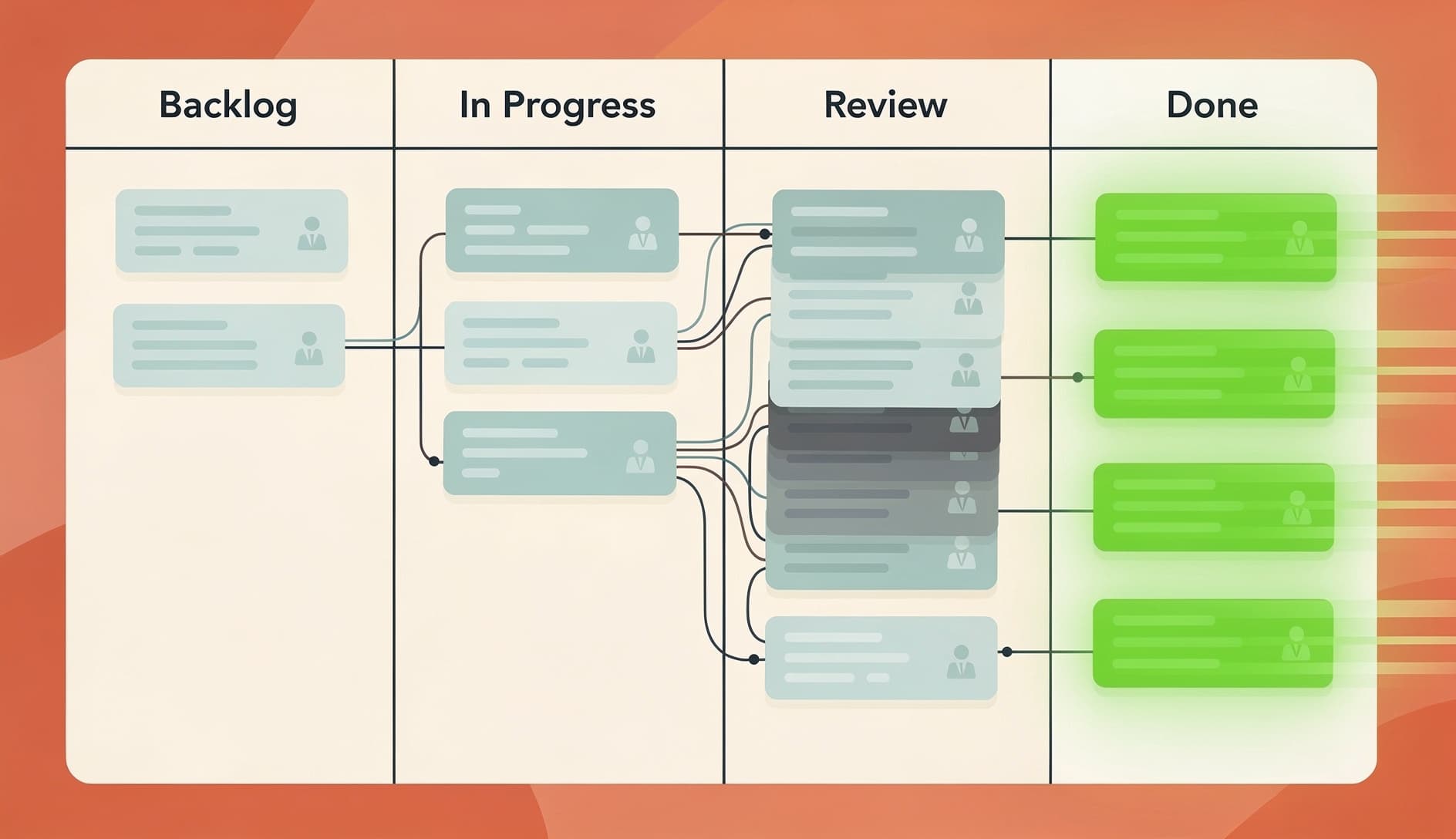

| Code review | Negative (more code to review, often lower quality) |

| Testing | Moderate (AI can generate tests, but someone has to verify them) |

| Integration and deployment | Minimal |
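One way to make the split in the table concrete is to estimate each phase separately and apply an AI adjustment only where the table suggests one. The sketch below is illustrative only: the phase names, the baseline hours, and every multiplier are hypothetical values chosen to match the direction of each row (the coding multiplier assumes a 4x speedup, inside the 2-10x range above). None of them are measurements.

```python
# Hypothetical multipliers applied to human-only hours for each phase.
# Below 1.0 means the phase gets faster with AI, above 1.0 means slower.
AI_MULTIPLIER = {
    "requirements": 1.0,  # Minimal impact
    "coding": 0.25,       # High: assumes 4x faster on routine work
    "review": 1.5,        # Negative: more code to review
    "testing": 0.8,       # Moderate: generated tests still need verifying
    "deployment": 1.0,    # Minimal impact
}


def delivery_effort(baseline_hours):
    """Return AI-adjusted hours per phase, given human-only hours per phase."""
    return {
        phase: hours * AI_MULTIPLIER[phase]
        for phase, hours in baseline_hours.items()
    }


# Hypothetical story: 20 hours without AI, 8 of them spent writing code.
baseline = {"requirements": 3, "coding": 8, "review": 2, "testing": 5, "deployment": 2}
adjusted = delivery_effort(baseline)

print(f"coding hours: {baseline['coding']} -> {adjusted['coding']}")
print(f"total hours:  {sum(baseline.values())} -> {sum(adjusted.values())}")
```

With these made-up inputs, coding time drops by 75% while total delivery time drops by only 30%, which is the gap between "coding effort" and "delivery effort" that the table points at.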

Recalibrate your reference stories

Other ways to measure